Rub ranking is a method or system for ordering or rating items, participants, or entities according to defined criteria, so that the resulting order reflects value or performance. The word “rub” here is neutral; it doesn’t denote a particular domain, and the concept can be adapted to many contexts. In simpler terms, rub ranking means building a ranking mechanism that uses rules, metrics, or data to decide which item is better, more relevant, or higher than another.

Why does rub ranking matter? Because in many systems—especially online, gamified, educational, or competitive environments—some way to sort or rank is needed. Without a good ranking, users may feel the system is arbitrary, unfair, or opaque. A robust rub ranking gives structure, clarity, and direction.

This article explores rub ranking in depth: how it works, how to design one, where it’s used, what pitfalls to avoid, and strategies to improve your ranking outcomes. Whether you’re a game designer, developer, platform operator, educator, or researcher interested in ranking systems, you’ll find both theory and practical tips here.

Rub ranking in different domains

Rub ranking isn’t limited to one field. In fact, its utility lies in its adaptability. Here are several domains where rub ranking shows up:

- Gaming: Many multiplayer or competitive games use ranking systems (e.g. levels, Elo ratings, tiers). A “rub ranking” variant might combine wins, participation, skill, and consistency.

- Education / Learning Platforms: Courses or assessments might rank students or modules, e.g. by performance, participation, improvements.

- Business / Performance Reviews: Employees or products might be ranked by metrics like sales, quality, customer feedback.

- Social Media / Content Platforms: Posts, creators, or comments can be ranked by engagement, upvotes, reach.

- Talent / Recruitment Platforms: Candidates may be ranked by skills, test scores, reviews, experience.

In each domain, rub ranking must adapt to the specific metrics, culture, and expectations. What works for a competitive game may not work well in education or reviews—but the core principles are similar.

How rub ranking works

At its heart, a rub ranking system takes inputs (metrics, scores, weights) and outputs a rank or score for each entity.

- Collect metrics / data: These could be raw counts (number of wins, number of upvotes), rates (accuracy, completion rate), temporal data (recency), or even qualitative scores (peer review).

- Normalize or scale metrics: Because different metrics may be on different scales, you often convert them to a common scale (e.g. 0–1 or 0–100). Sometimes you apply logarithms or other transformations.

- Assign weights: Each metric may not have equal importance. For example, in a content platform, you may weigh upvotes at 50%, shares at 30%, comments at 20%.

- Compute composite score: Sum (or combine) weighted normalized metrics to get a single score per entity.

- Generate rank order / tiers: Based on composite scores, you sort items. You may also assign buckets or tiers (e.g. gold, silver, bronze) rather than strict linear order.

- Handle ties & edge cases: Two items may have the same score; you’ll need tie-breaking rules (e.g. earliest submission wins, secondary metric). Also deal with missing data, outliers, or extremes (very low or high values).

- Update the ranking over time: Input metrics change (new votes, new results, aging effects where older data may weigh less), so the ranking must be dynamic or periodically recomputed.

Thus, a rub ranking transforms diverse data into a ranking. The art lies in choosing metrics, weights, transformations, and update rules.
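The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the metric names and the 50/30/20 weights come from the content-platform example, while the `created` tie-breaker field, the function names, and the sample posts are assumptions made up for the sketch.

```python
# Hypothetical weights taken from the content-platform example above.
WEIGHTS = {"upvotes": 0.5, "shares": 0.3, "comments": 0.2}


def min_max(values):
    """Scale a list of numbers to [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def rank_items(items, weights=WEIGHTS):
    """Return (name, score, rank) tuples, best first.

    Each item is a dict with a "name", a "created" ordinal used as the
    tie-breaker (earlier wins), and one raw count per metric in `weights`.
    Missing metrics default to 0.
    """
    normalized = {
        m: min_max([item.get(m, 0) for item in items]) for m in weights
    }
    scored = []
    for i, item in enumerate(items):
        score = sum(weights[m] * normalized[m][i] for m in weights)
        scored.append((item["name"], round(score, 4), item["created"]))
    # Sort by score descending, then by earliest submission.
    scored.sort(key=lambda t: (-t[1], t[2]))
    return [(name, score, pos + 1) for pos, (name, score, _) in enumerate(scored)]


posts = [
    {"name": "a", "created": 1, "upvotes": 100, "shares": 10, "comments": 5},
    {"name": "b", "created": 2, "upvotes": 50, "shares": 30, "comments": 25},
    {"name": "c", "created": 3, "upvotes": 0, "shares": 0, "comments": 0},
]
ranking = rank_items(posts)
for name, score, rank in ranking:
    print(rank, name, score)
```

In this toy data, post "b" outranks post "a" despite having half the upvotes, because shares and comments together carry as much weight as upvotes. Changing the weights changes the order, which is why the choice of weights deserves testing.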

Key factors that influence rub ranking

When designing or analyzing a rub ranking, certain factors tend to dominate or shift outcomes:

- Quality vs quantity: If you weigh sheer volume heavily, prolific but low-quality actors may dominate. If you favor quality, you might miss consistent contributors.

- Consistency: Entities that sustain their performance over time should usually rank above those with a single spike.

- Recency / freshness: Newer actions or metrics may deserve more weight than old ones.

- Engagement or depth: Some actions signal more than others, such as a comment versus a like, or watch time versus a click.

- Speed or responsiveness: In some systems, faster performance (response time) matters.

- Difficulty or level: If tasks or challenges vary in difficulty, you may need to normalize or adjust metrics accordingly.

- Confidence or reliability: A rating based on few data points is less trustworthy; you may discount or penalize low sample sizes.

Balancing these is key: too much bias in one direction leads to distortions.
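One common way to handle the confidence factor is to shrink each entity's average toward a global prior, often called a Bayesian average. The sketch below assumes a 1–5 rating scale, a prior mean of 3.5, and a prior weight of 10 imaginary ratings; all three values are illustrative assumptions, not recommendations.

```python
def bayesian_average(ratings, prior_mean, prior_weight):
    """Shrink an entity's mean rating toward `prior_mean`.

    `prior_weight` behaves like a number of imaginary ratings at the prior
    mean, so entities with few real ratings stay close to the prior.
    """
    n = len(ratings)
    return (prior_weight * prior_mean + sum(ratings)) / (prior_weight + n)


# Two perfect ratings versus two hundred ratings of 4.5.
newcomer = bayesian_average([5, 5], prior_mean=3.5, prior_weight=10)
veteran = bayesian_average([4.5] * 200, prior_mean=3.5, prior_weight=10)
print(newcomer, veteran)
```

With these assumed values the newcomer's raw mean of 5.0 is pulled down to 3.75, while the veteran's large sample keeps its score near 4.45, so the better-evidenced entity ranks higher.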

Types of rub ranking systems

Depending on goals, you can choose various structures:

- Linear model: Score = w₁·m₁ + w₂·m₂ + …

- Exponential or non-linear: Some metrics influence score exponentially (e.g. “boost” for viral performance).

- Tiered / bucketed: Instead of continuous ranking, assign tiers (e.g. beginner, intermediate, expert).

- Hybrid approaches: Use linear combination for base score, then add bonuses or penalties (boosts, caps).

- Percentile ranking: Ranking relative to population (e.g. 90th percentile).

- Elo / TrueSkill style: Pairwise comparisons and rating adjustments based on match outcomes (often used in gaming).

Each type has tradeoffs: linear is simple but coarse, exponential can skew extremes, percentile depends on population distribution, etc.
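To make the pairwise style concrete, here is a minimal sketch of a standard Elo update. The K-factor of 32 is a commonly used default and is an assumption here; real systems tune it, and TrueSkill uses a different, more involved model that this sketch does not cover.

```python
def expected_score(rating_a, rating_b):
    """Expected score of player A against player B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_update(rating_a, rating_b, score_a, k=32):
    """Return the new (rating_a, rating_b) after one game.

    score_a is 1.0 for a win by A, 0.5 for a draw, 0.0 for a loss.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


# Two equally rated players; A wins.
print(elo_update(1500, 1500, 1.0))  # (1516.0, 1484.0)
```

The update is zero-sum: whatever A gains, B loses. An upset win against a much stronger opponent moves ratings further than an expected win, because the gap between actual and expected score is larger.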

Designing a rub ranking system

To build a good rub ranking system, follow a thoughtful design process:

- Define your goals: What behavior do you want to encourage? What qualities do you want to reward?

- Choose metrics that align: Metrics should reflect desired performance, not easily gamed proxies.

- Decide on weighting and scaling: Use domain knowledge or experiments to set weights.

- Ensure fairness, transparency, interpretability: Users prefer systems they can understand (or at least trust).

- Plan for updates and dynamics: Data changes over time; design refresh cycles or real-time updates.

- Test with real or simulated data: Use past data to simulate how ranking would behave.

- Monitor and tweak: After launch, collect feedback, identify anomalies, and adjust.

- Provide feedback to users: Let them see why they rank where they are (metrics, progress).

- Guard against exploitation: Recognize that users may attempt to optimize or game the system; design resilience.

In short: treat it like a product feature, not a one-time calculation.

Mathematical models behind rub ranking

Some mathematical techniques commonly appear in rub ranking:

- Normalization: e.g. min-max scaling to [0,1]: (x – min)/(max – min)

- Z-score standardization: subtract mean, divide by standard deviation

- Logarithmic scaling: compress large values: score = log(x + 1)

- Sigmoid or logistic transforms: to bound extreme values

- Weighted sum / linear combination

- Non-linear combinations: interactions (m₁ × m₂), exponentials

- Decay functions: weight(age) = e^(−λ·age), or linear decay

- Ranking percentiles: compute where an item lies relative to the distribution

- Pairwise rating models (Elo, TrueSkill): updating scores based on pairwise competitions

These tools help you manage scale differences, outliers, and time effects.
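Several of the transforms listed above fit in a few lines each. The following sketch uses only the Python standard library; the function names are illustrative, and expressing the decay rate λ through a half-life is an added convenience rather than something the list prescribes.

```python
import math
from statistics import mean, pstdev


def z_scores(values):
    """Standardize values: subtract the mean, divide by the standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def log_scale(x):
    """Compress large non-negative values: log(x + 1)."""
    return math.log1p(x)


def sigmoid(x):
    """Squash any real number into (0, 1), written to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def decay_weight(age, lam):
    """Exponential decay: weight(age) = e^(-lam * age)."""
    return math.exp(-lam * age)


def lambda_from_half_life(half_life):
    """Decay rate at which the weight halves every `half_life` time units."""
    return math.log(2) / half_life


lam = lambda_from_half_life(30)   # e.g. a 30-day half-life
print(decay_weight(30, lam))      # approximately 0.5
print(log_scale(0), log_scale(999))
print(z_scores([1, 2, 3]))
```

Thinking in half-lives tends to be easier to explain to stakeholders than a raw λ: "a result from a month ago counts half as much" is a statement people can evaluate.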

Benefits of using rub ranking

When done well, a rub ranking brings many advantages:

- Motivation & progress signals: Users see where they stand and how to improve

- Clarity and structure: A visible, well-defined order gives users a sense of direction

- Comparability: You can meaningfully compare entities

- Incentives: Encourages behaviors aligned with your goals

- Filtering and discovery: High performers rise, helping others find quality

- Feedback loops: Users can react, improve, and re-rank

These benefits support engagement, fairness, and system health—if the ranking is well designed.

Challenges and pitfalls in rub ranking

But beware: many ranking systems fail or backfire for these reasons:

- Gaming / manipulation: Users find loopholes or optimize for the metric, not real value

- Bias and unfairness: Some groups may be disadvantaged by design

- Data sparsity: New or less active entities get penalized unfairly

- Cold start issue: Without data, ranking new entrants is hard

- Overemphasis on popular items: The “rich get richer” effect, where popular items gain more visibility and thus even more engagement

- Opacity / distrust: If users don’t understand how ranking works, they may distrust or criticize it

- Unintended incentives: If ranking rewards a side metric, users may do that at expense of quality

- Rigidity: If the ranking system is hard to change, it may fail when context shifts

Designers must anticipate and mitigate these risks.

Ethical considerations in rub ranking

Ranking systems carry ethical weight:

- Fairness and bias: Ensure your ranking doesn’t systematically disadvantage groups

- Transparency: Where possible, show how ranking is computed or allow checks

- Privacy: Use metrics that don’t invade privacy

- Avoiding manipulation: Design the system to resist deliberate exploitation

- Accountability: Be ready to explain or revise your system if harms emerge

- Responsibility: Recognize that ranking affects people; a low rank may discourage them or harm their reputation

Ethics isn’t optional; it’s essential in systems that affect real lives or livelihoods.

Rub ranking and user psychology

A ranking system is not just math—it’s human psychology:

- Feedback loops: Seeing rank movement gives motivation

- Loss aversion: A drop in rank may feel worse than an equal gain feels good

- Anchoring and framing: The presence of tiers influences perception

- Goal gradient effect: People accelerate efforts when close to next rank

- Social comparison: Users compare themselves to neighbors

- Motivated behavior: Some users may put more effort into gaming the system than into delivering real value

Understanding these psychological dynamics helps you better design incentives and guard rails.

Improving your rub ranking

If you have an existing ranking or are building a new one, here are strategies:

- Regular calibration: Periodically re-assess weights and thresholds

- Use hybrid signals: Combine short-term and long-term metrics

- Introduce decay / time weighting: So stale performance fades

- Confidence intervals / margin of error: For low-data items, adjust scores conservatively

- Tiered progression: Let users move gradually (e.g. promotion thresholds)

- User feedback & appeals: Allow users to question ranking or see breakdowns

- Anomaly detection: Detect suspicious manipulation patterns

- A/B experiments: Try alternative weightings in controlled tests

Over time, with careful iteration, the ranking will improve and stabilize.
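For the confidence-interval strategy, one widely used option for up/down style votes is to rank by the lower bound of the Wilson score interval rather than by the raw positive fraction. The sketch below assumes binary feedback and a 95% interval (z = 1.96); both are illustrative choices.

```python
import math


def wilson_lower_bound(positive, total, z=1.96):
    """Lower bound of the Wilson score interval for a positive fraction.

    Items with little data get a conservative (low) score even if all of
    their feedback so far is positive.
    """
    if total == 0:
        return 0.0
    p = positive / total
    denom = 1 + z * z / total
    centre = p + z * z / (2 * total)
    margin = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total))
    return (centre - margin) / denom


few = wilson_lower_bound(5, 5)       # 100% positive, but only 5 votes
many = wilson_lower_bound(90, 100)   # 90% positive over 100 votes
print(round(few, 3), round(many, 3))
```

Under these assumptions the item with 90 of 100 positive votes scores higher than the item with 5 of 5, which matches the intuition that five votes are weak evidence.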

Rub ranking in online platforms

Many modern platforms use ranking in one way or another; here’s how rub ranking might appear:

- Content platforms: Rank posts by a composite of upvotes, shares, comments, comment depth

- Review sites: Rank businesses or reviews by score, recency, helpfulness votes

- Leaderboard systems: Users climb leaderboard based on activity, achievements

- Gamified learning: Rank students by progress, quiz accuracy, peer feedback

- Marketplaces: Rank sellers by rating, sales volume, reliability

In each case, rub ranking helps structure discovery, reward engagement, and enforce quality.

Case study: rub ranking in gaming

Consider a competitive multiplayer game that wants to rank players. A rub ranking system might combine:

- Win/loss ratio

- Match difficulty

- Frequency of play

- Behavior metrics (was the player penalized, reported?)

- Consistency over time

You might assign weights: wins 50%, match difficulty 20%, consistency 15%, behavior metrics 15% (frequency of play is left unweighted in this example). Use decay so older matches count less. You also apply tie-breakers (e.g. fastest victories). The result: a dynamic ranking showing top players, incentivizing fair play and consistent performance.

Pitfalls: if you overemphasize win count, players may collude or play safe matches. If you undervalue behavior metrics, toxic players may still rank high.
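A rough sketch of this scoring scheme follows. The 50/20/15/15 weights come from the case study; everything else is assumed for illustration: the match tuple layout, the 30-day half-life, and the idea that consistency and behavior arrive as precomputed values in [0, 1].

```python
import math

# Weights from the case study; they sum to 1.0.
WEIGHTS = {"wins": 0.50, "difficulty": 0.20, "consistency": 0.15, "behavior": 0.15}


def decayed_mean(values, ages_days, half_life_days=30.0):
    """Average of `values` where older entries count exponentially less."""
    lam = math.log(2) / half_life_days
    weights = [math.exp(-lam * age) for age in ages_days]
    total = sum(weights)
    if total == 0:
        return 0.0
    return sum(w * v for w, v in zip(weights, values)) / total


def player_score(matches, consistency, behavior):
    """Composite score in [0, 1].

    matches: list of (age_days, won, difficulty) with difficulty in [0, 1].
    consistency, behavior: assumed to be precomputed values in [0, 1].
    """
    ages = [m[0] for m in matches]
    wins = decayed_mean([1.0 if m[1] else 0.0 for m in matches], ages)
    difficulty = decayed_mean([m[2] for m in matches], ages)
    return (
        WEIGHTS["wins"] * wins
        + WEIGHTS["difficulty"] * difficulty
        + WEIGHTS["consistency"] * consistency
        + WEIGHTS["behavior"] * behavior
    )


recent_winner = player_score([(0, True, 0.5), (60, False, 0.5)], 0.7, 1.0)
past_winner = player_score([(0, False, 0.5), (60, True, 0.5)], 0.7, 1.0)
print(recent_winner > past_winner)  # True: the recent win counts more
```

Both players have one win and one loss at the same difficulty, yet the one who won recently scores higher, which is the effect the decay is meant to produce.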

Case study: rub ranking in education or learning platforms

Imagine a learning platform where students complete modules, take quizzes, leave reviews, and help peers. A rub ranking might include:

- Quiz accuracy

- Number of modules completed

- Time spent learning

- Peer reviews (helpfulness)

- Improvement over time

Weights might favor progress and improvement, not just raw scores. This ranking can encourage continuous learning rather than cramming.

But beware: some learners may game low-difficulty modules repeatedly, or collaborate unfairly. Mitigation: cap repetitive attempts, monitor suspicious patterns, normalize by difficulty.
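The two mitigations of capping repeated attempts and normalizing by difficulty can be combined in one small function. This is a sketch under assumptions: the attempt tuple layout, the cap of three attempts, and the rule that only the best capped attempt per module earns points are all illustrative.

```python
from collections import defaultdict


def learner_points(attempts, max_attempts=3):
    """Difficulty-weighted points with a cap on repeated attempts.

    attempts: chronological list of (module_id, accuracy, difficulty), with
    accuracy in [0, 1] and difficulty as a positive multiplier.
    Only the first `max_attempts` tries per module are considered, and each
    module contributes its best considered attempt once.
    """
    counted = defaultdict(list)
    for module_id, accuracy, difficulty in attempts:
        if len(counted[module_id]) < max_attempts:
            counted[module_id].append(accuracy * difficulty)
    return sum(max(scores) for scores in counted.values())


# Repeating an easy module ten times earns no more than doing it once.
farmer = learner_points([("intro", 1.0, 1.0)] * 10)
# One decent attempt at a hard module is worth more.
challenger = learner_points([("advanced", 0.8, 3.0)])
print(farmer, challenger)
```

Because each module pays out once, farming an easy module stops being profitable, and the difficulty multiplier makes harder material worth attempting.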

Comparisons: rub ranking vs other ranking systems

How does rub ranking differ from alternatives?

- Star / rating systems: Simple (1–5 stars), but non-composite and coarse.

- Percentile ranking: Places you relative to population; good for comparisons, but not absolute scores.

- Elo / TrueSkill / rating systems: Based on pairwise matches and predictive updates; more complex but good for head-to-head.

- Bucket / grade systems: Use discrete tiers (A, B, C) instead of continuous ranking.

- Machine Learning rankings: Use models to predict desirability or relevance; black-box but powerful.

Rub ranking often sits between simple ratings and full ML ranking, offering interpretability and flexibility.

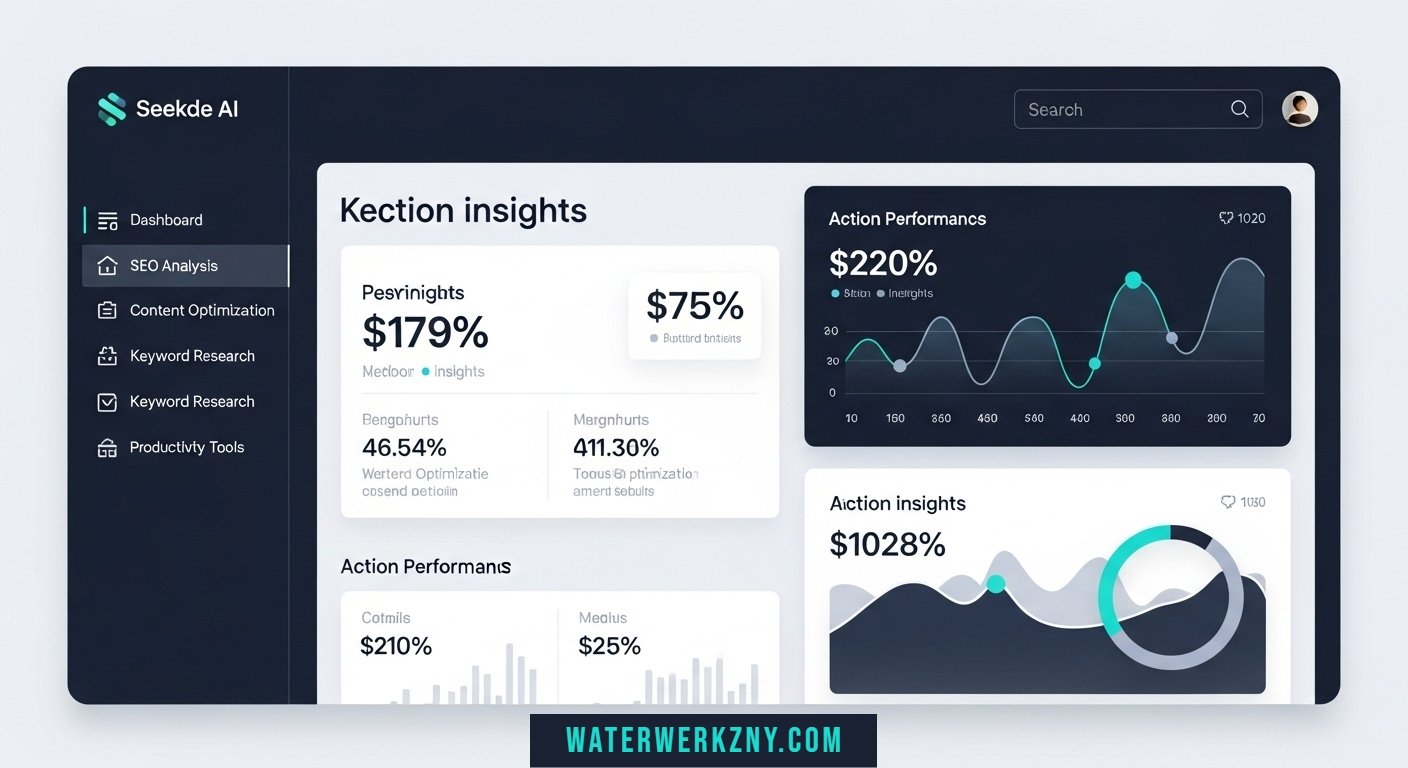

Tools and software for rub ranking

You don’t have to build from scratch. Some tools and libraries help:

- Ranking / scoring libraries in Python, R, or JS (customizable)

- Analytics / BI platforms (Tableau, Looker) for visualizing rank behavior

- Databases / query systems with window functions (SQL ranking functions)

- Machine learning platforms for predictive ranking

- A/B testing tools (e.g. Optimizely; Google Optimize was retired in 2023) for comparing ranking versions

Often you glue a ranking formula into your backend or analytics pipeline.

Evaluating a rub ranking’s performance

How do you know if your ranking is “good”? Use these evaluation criteria:

- Predictive validity: Does ranking predict future success or engagement?

- User satisfaction: Do users trust and accept the ranking?

- Fairness metrics: Does the ranking treat similar participants alike?

- Stability / volatility: Is the ranking too jumpy, or too slow to reflect real changes?

- Resistance to manipulation: Are there exploit patterns?

- Coverage / inclusivity: Do newcomers or low-data items get reasonable exposure?

Use metrics, logs, and user surveys to assess.
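Stability, for instance, can be quantified by comparing two ranking snapshots with Spearman's rank correlation. The sketch below uses the classic formula for rankings without ties; the snapshot format (a dict from item to rank position) is an assumption.

```python
def spearman_rho(rank_before, rank_after):
    """Spearman rank correlation between two snapshots of the same items.

    Both arguments map item -> rank position (1 = best) and must contain
    the same items with no tied ranks. Returns a value in [-1, 1].
    """
    if set(rank_before) != set(rank_after):
        raise ValueError("snapshots must rank the same items")
    n = len(rank_before)
    if n < 2:
        return 1.0
    d_squared = sum((rank_before[i] - rank_after[i]) ** 2 for i in rank_before)
    return 1 - 6 * d_squared / (n * (n * n - 1))


monday = {"ann": 1, "bo": 2, "cy": 3, "di": 4}
tuesday = {"ann": 2, "bo": 1, "cy": 3, "di": 4}   # top two swapped
print(spearman_rho(monday, tuesday))  # 0.8
```

A value near 1 between consecutive snapshots suggests a stable ranking; values that stay at exactly 1 for long periods may instead indicate a ranking that is too insensitive to new data.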

Adjusting and tuning rub ranking systems

No ranking is perfect from day one. Here’s how to refine:

- Iterative updates: Try small changes and measure effects

- Threshold shifts: Move the cutoffs for tiers or classes

- Weight rebalancing: Adjust relative importance of metrics

- Decay rate tuning: Change how fast past data loses weight

- Introduce guard rails: Caps, floors, smoothing functions

- Feedback mechanisms: Solicit user feedback or monitoring alerts

- Rollback strategies: If a change backfires, revert safely

Each adjustment must be tested, monitored, and documented.
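The guard rails mentioned above (caps, floors, smoothing) can be sketched as a single update step. All parameter values here are illustrative assumptions: a 0–100 score range, a smoothing factor of 0.3, and a maximum movement of 10 points per update.

```python
def clamp(value, floor, cap):
    """Keep value within [floor, cap]."""
    return max(floor, min(cap, value))


def guarded_update(previous, raw, floor=0.0, cap=100.0, alpha=0.3, max_step=10.0):
    """Move a score toward a new raw value with three guard rails.

    1. Clamp the raw value into [floor, cap].
    2. Smooth with an exponential moving average (weight alpha on new data).
    3. Limit the change per update to max_step in either direction.
    """
    target = clamp(raw, floor, cap)
    smoothed = alpha * target + (1 - alpha) * previous
    step = clamp(smoothed - previous, -max_step, max_step)
    return previous + step


print(guarded_update(50.0, 500.0))  # an outlier spike moves the score only to 60.0
print(guarded_update(50.0, 55.0))   # a small change passes through smoothing only
```

The trade-off is responsiveness: tighter guard rails resist manipulation and noise but also delay legitimate rank changes, which ties back to decay-rate and threshold tuning.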

Rub ranking and fairness across groups

A major concern: ranking may disadvantage certain groups (e.g. novices, underrepresented users). To mitigate:

- Normalization by group characteristics: So raw metrics don’t penalize inherent constraints

- Fairness constraints: Force representation minima or parity

- Audit bias: Regularly check for disparities or group skew

- Blind scoring: Remove identity attributes from metrics

- Feedback / appeals: Let users contest unfair placements

- Progressive exposure: Give low-ranked users visibility occasionally

Fairness is not optional; it’s part of system design.
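A simple starting point for a bias audit is to compare each group's share of the top k positions with its share of the whole population. The sketch below is only a first-pass check, and the group labels and input format are assumptions; a real audit would use several fairness measures and domain review.

```python
from collections import Counter


def top_k_representation(ranked, k):
    """Compare group shares in the top k with shares overall.

    ranked: list of (item_id, group) tuples, best first.
    Returns {group: (share_in_top_k, share_overall)}.
    """
    n = len(ranked)
    k = min(k, n)
    if k <= 0:
        return {}
    overall = Counter(group for _, group in ranked)
    top = Counter(group for _, group in ranked[:k])
    return {g: (top.get(g, 0) / k, overall[g] / n) for g in overall}


# Hypothetical leaderboard: veterans hold the first five places.
board = [(f"v{i}", "veteran") for i in range(5)] + [(f"n{i}", "novice") for i in range(5)]
audit = top_k_representation(board, k=4)
print(audit)
```

Here novices are half the population but hold none of the top four positions. A gap like that is not proof of unfairness on its own, but it is the kind of disparity that should prompt a closer look at the metrics and weights.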

Future trends in rub ranking

Looking ahead, rub ranking will evolve:

- AI / ML hybrid ranking: Ranking formulas augmented by learned models

- Dynamic / adaptive ranking: Weights change automatically per user or context

- Explainable ranking: Users see detailed, understandable breakdowns

- Gamification + personalization: Rankings tailored to individual goals

- Decentralized / blockchain ranking: Transparent, verifiable ranking systems

- Federated or collaborative ranking: Multiple systems feeding into joint ranking

As data and expectations grow, ranking systems will become more fluid, fair, and intelligent.

Best practices for implementing rub ranking

To make your rub ranking more robust:

- Document the formula and reasoning

- Maintain transparency (where feasible)

- Monitor and log ranking decisions

- Provide user feedback on rank and metrics

- Use fallback or default rules for missing data

- Protect against extreme outliers

- Allow adjustment and feedback

- Start simple, iterate gradually

- Educate stakeholders and users

Treat the ranking as a living system.

Common mistakes and how to avoid them

Here are pitfalls to avoid when building or deploying rub ranking:

- Overcomplicating: too many metrics or convoluted weightings

- Ignoring edge cases: missing data, ties, zeros

- Letting a single metric dominate

- Making rules opaque

- Setting weights without testing or domain insight

- Not anticipating gaming or abuse

- Freezing the system post launch

- Ignoring fairness or bias

Being aware of these helps you steer clear.

Conclusion

In sum, rub ranking is a powerful concept and tool for creating structured, meaningful ordering of entities in systems where mere popularity or raw counts would fall short. Whether you’re building a competitive game, educational environment, content platform, or performance review system, understanding how to design, implement, evaluate, and iterate a rub ranking can make the difference between a fair, trusted system and one that fosters frustration, gaming, or bias.

You’ve seen how rub ranking works, what factors influence it, how to build it, what pitfalls to avoid, and how trends may shape its future. But the most important insight is this: a ranking system is not static. It must evolve, reflect your goals, and respect users. If done thoughtfully, rub ranking can drive engagement, fairness, clarity, and improvement in your system.